Artificial Intelligence SIG

Using Amazon Bedrock to Run OpenAI-Compatible Applications

Introduction

OpenAI is a leading artificial intelligence research and deployment company. Founded in December 2015, it is known for creating advanced AI models, such as the GPT (Generative Pre-trained Transformer) series, and developing safe, useful, and widely accessible AI technologies. The OpenAI API allows developers to integrate powerful AI models—like GPT-4 for natural language understanding, DALL·E for image generation, and Whisper for speech recognition—into their own applications, websites or services, enabling a wide range of innovative and intelligent solutions.

OpenAI offers a variety of APIs that allow developers to use advanced AI capabilities in their applications. Among these are the Responses API, which generates model output from a prompt; the Chat Completions API, designed for more interactive and conversational experiences; and the Realtime API, which enables low-latency interactions suited to live applications. Additionally, the Assistants API allows for the creation of goal-oriented AI agents that can handle complex tasks, while the Batch API supports the efficient processing of large volumes of requests. Together, these APIs provide flexible and scalable tools to integrate AI into a wide range of digital products and services.

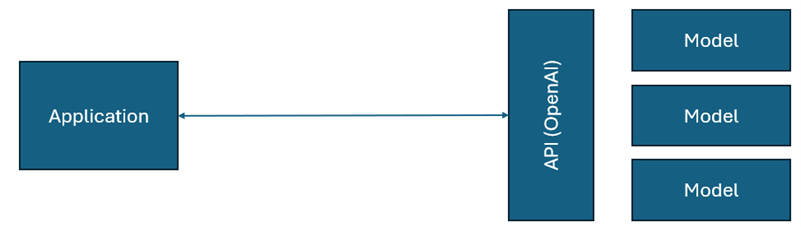

A typical OpenAI-powered application works by sending structured JSON payloads to a set of standardised API endpoints provided by OpenAI. These endpoints correspond to specific services (such as text generation, conversation handling or image creation) and are designed to receive input data, process it using advanced AI models, and return relevant responses (Figure 1).
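To make the request flow concrete, the following is a minimal sketch of how an application might build a Chat Completions request using only the Python standard library. The model name `gpt-4o-mini`, the system prompt, and the helper names `build_chat_request` and `send` are illustrative assumptions, not part of the original article.

```python
import json
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(api_key: str, user_text: str,
                       model: str = "gpt-4o-mini",  # placeholder model name
                       base_url: str = OPENAI_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request."""
    # The JSON payload names a model and carries the conversation so far.
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Keeping `base_url` as a parameter is a deliberate choice in this sketch: the same request shape can then be sent to any OpenAI-compatible endpoint by changing one value.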

Due to the popularity of OpenAI's API, other providers have begun offering compatible APIs.

Bedrock Access Gateway

Amazon Bedrock is a fully managed service by Amazon Web Services (AWS) that allows developers to build generative AI applications using a variety of foundation models—including those from Anthropic, Meta, Mistral, Cohere, and Amazon—without the complication of managing infrastructure.

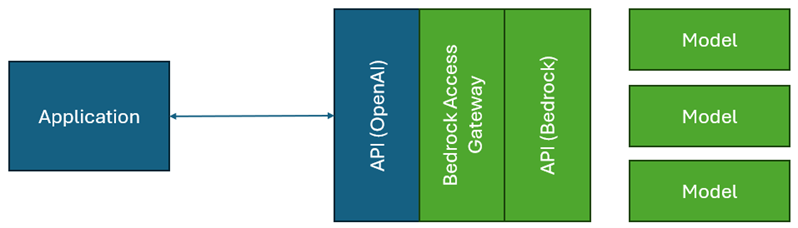

However, Amazon Bedrock does not natively support OpenAI-compatible API endpoints. To bridge this gap, AWS provides a community-supported project: Bedrock Access Gateway [1].

This project acts as a middleware layer that emulates OpenAI API endpoints and forwards requests to Bedrock models (Figure 2).
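The kind of translation such a middleware layer performs can be sketched as follows. This is an illustration only, not the gateway's actual implementation: it assumes plain-text message content, assumes the client passes a Bedrock model ID in the `model` field, and maps the request onto the shape accepted by the Amazon Bedrock Converse API. The function name `openai_to_converse` is hypothetical.

```python
from typing import Any


def openai_to_converse(openai_request: dict[str, Any]) -> dict[str, Any]:
    """Map an OpenAI-style chat request onto Bedrock Converse arguments.

    Hypothetical helper: handles text-only messages and a few
    sampling parameters, nothing more.
    """
    system_blocks = []
    messages = []
    for message in openai_request["messages"]:
        if message["role"] == "system":
            # Converse takes system prompts in a separate top-level list.
            system_blocks.append({"text": message["content"]})
        else:
            # Converse expects content as a list of typed blocks.
            messages.append({
                "role": message["role"],
                "content": [{"text": message["content"]}],
            })

    # Rename OpenAI sampling parameters to their Converse equivalents.
    inference_config = {}
    if "max_tokens" in openai_request:
        inference_config["maxTokens"] = openai_request["max_tokens"]
    if "temperature" in openai_request:
        inference_config["temperature"] = openai_request["temperature"]
    if "top_p" in openai_request:
        inference_config["topP"] = openai_request["top_p"]

    converse_request: dict[str, Any] = {
        "modelId": openai_request["model"],
        "messages": messages,
    }
    if system_blocks:
        converse_request["system"] = system_blocks
    if inference_config:
        converse_request["inferenceConfig"] = inference_config
    return converse_request
```

The resulting dictionary could be passed as keyword arguments to the `converse` method of a boto3 `bedrock-runtime` client; a real gateway would also need to translate the response back into the OpenAI format and handle streaming, tool calls and errors.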

Setup

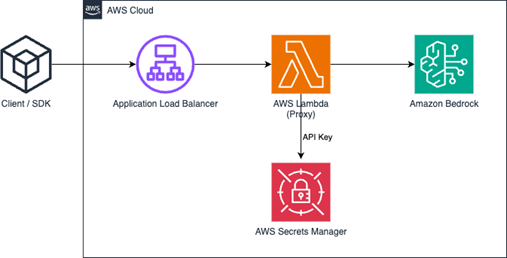

There are three main deployment options available for setting up the Bedrock Access Gateway: (1) using AWS CloudFormation with Lambda, (2) using CloudFormation with Fargate, or (3) running the gateway locally. The first option, CloudFormation with Lambda, offers a straightforward setup process and is generally more cost-effective. Once the deployment is complete, the system architecture will resemble what is shown in Figure 3.

The general steps for setting up the Bedrock Access Gateway using AWS CloudFormation with Lambda are as follows. Start by selecting a region that supports Amazon Bedrock, such as us-east-1. Then, create an API key and store it securely in AWS Secrets Manager. Next, request access to the models you need through the Amazon Bedrock console, and deploy the CloudFormation stack using the link in the Bedrock Access Gateway repository [1]. Finally, once the deployment is complete, retrieve the API endpoint from the CloudFormation stack outputs for use in your applications.
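The final step, retrieving the API endpoint, can also be scripted. The sketch below assumes boto3 is installed and AWS credentials are configured, and it assumes the stack exposes the endpoint under an output key named `APIBaseUrl`; check the Outputs tab of your own stack for the actual key. The stack name and helper names are hypothetical.

```python
from typing import Any, Optional


def find_stack_output(describe_stacks_response: dict[str, Any],
                      output_key: str) -> Optional[str]:
    """Return the value of a named output from a describe_stacks response."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output.get("OutputKey") == output_key:
                return output.get("OutputValue")
    return None


def fetch_gateway_url(stack_name: str,
                      region: str = "us-east-1",
                      output_key: str = "APIBaseUrl") -> Optional[str]:
    """Look up the gateway endpoint from a deployed CloudFormation stack."""
    # Imported lazily so find_stack_output works without the AWS SDK.
    import boto3

    client = boto3.client("cloudformation", region_name=region)
    response = client.describe_stacks(StackName=stack_name)
    return find_stack_output(response, output_key)
```

Once the endpoint is known, an existing application can usually be redirected through configuration alone. Recent versions of the official OpenAI Python SDK, for example, read the `OPENAI_BASE_URL` and `OPENAI_API_KEY` environment variables, so setting these to the gateway endpoint and the key stored in Secrets Manager should be enough in many cases.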

Pros and Cons of Using Bedrock Access Gateway

The main advantages of using the Bedrock Access Gateway include consolidated billing, which allows centralised tracking of costs within AWS, and the ability to use AWS credits, which is particularly beneficial for startups in the AWS Activate programme. Additionally, users can access a wider variety of models that may not be available through OpenAI, along with AWS services such as IAM-based security, CloudWatch logs and VPC networking, all of which enhance security and monitoring.

However, there are some drawbacks to consider. The setup increases complexity by adding an extra layer of translation and infrastructure to manage. The additional hop may also add slight latency to requests and responses, and not all OpenAI endpoints may be fully supported by the gateway. Lastly, while the hosting costs are minimal, the gateway is still another component to maintain within the infrastructure.

Summary

For teams already building AI applications using the OpenAI API format, the Bedrock Access Gateway provides a bridge to AWS’s secure and flexible ecosystem. It allows developers to take advantage of AWS-native benefits—such as consolidated billing, enterprise governance, and broader model access—without rewriting existing codebases. While it introduces some overhead, the trade-off may be worth it, especially in enterprise or multi-cloud contexts where control, compliance, and cost tracking matter.

Contact Information: Woon Tong Wing

School of Information Technology

Nanyang Polytechnic

E-mail: [email protected]

References

[1] https://github.com/aws-samples/bedrock-access-gateway

Mr Woon Tong Wing is a Senior Lecturer at Nanyang Polytechnic's School of Information Technology (SIT), where he leads the development of cloud computing curricula. As Chief Coach for the WorldSkills cloud computing trade, he trains NYP competitors for the WorldSkills competition, leading them to medal wins. He is also a member of the Information Technology Standards Committee (ITSC) for Cloud Computing and holds multiple industry certifications in cloud technology, cybersecurity and AI.